LiveCycle Data Services – Channels and Endpoints Explained!

As a follow-up to my LCDS Quick Start Guide, I wanted to offer more in-depth information about using channels and endpoints with your LiveCycle Data Services application. So what is a channel, and what is an endpoint? A ‘channel’ is the client-side code that manages the connection, and an ‘endpoint’ is the server-side code or configuration that manages it. For a Flex client to communicate with LiveCycle Data Services, a channel must be defined on the client side to communicate with the endpoint on the server side. The data is sent across the channel in a network-specific format to the endpoint. The endpoint code (Java) reads the data that comes across the channel and determines which service to route it to. Here a service is simply the code that processes the data sent over the channel: a remoting service, a data management service, a messaging service or an HTTP proxy service. The channel and endpoint definitions are configured and associated in the services-config.xml file via tags such as the following:

<channel-definition id="samples-amf" class="mx.messaging.channels.AMFChannel">

<endpoint url="http://servername:8400/myapp/messagebroker/amf"

class="flex.messaging.endpoints.AMFEndpoint"/>

</channel-definition>

where the ‘id’ attribute identifies the client-side channel and the ‘class’ attribute on the channel definition names the client-side channel class. The endpoint element is included as a child of the channel definition to show which server-side endpoint is used with that client-side channel, and its own ‘class’ attribute names the server-side endpoint class. The ‘url’ attribute on the endpoint must be unique across endpoints, and points to either the MessageBrokerServlet or an NIO-based server depending on which implementation you are using. The above example shows the use of the MessageBrokerServlet.

Christophe Coenraets presents on LCDS and BlazeDS often, and has great summary information in those presentations regarding channel usage. I took details from his slides and summarized them in the text below because I think it’s very valuable information for people to know.

Simple Polling

- Near real-time

- A reasonable choice when you expect at most 100-150 connections.

<channel-definition id="my-amf">

<endpoint url="http://{server.name}:{server.port}/{context.root}/messagebroker/amfpolling"/>

<properties>

<polling-enabled>true</polling-enabled>

<polling-interval-millis>8000</polling-interval-millis>

</properties>

</channel-definition>
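If you create your channels at runtime instead (see Configuring Channels further down), the same simple polling setup could be sketched in ActionScript roughly as follows. This is only a minimal sketch: the server name, port and context root in the URL are placeholders I made up, and the 8000 ms interval simply mirrors the config above.

```actionscript
import mx.messaging.ChannelSet;
import mx.messaging.channels.AMFChannel;

// Placeholder endpoint URL (assumption): substitute your own server, port and context root.
var pollingChannel:AMFChannel = new AMFChannel("my-amf",
    "http://servername:8400/myapp/messagebroker/amfpolling");

// Client-side counterparts of <polling-enabled> and <polling-interval-millis>.
pollingChannel.pollingEnabled = true;
pollingChannel.pollingInterval = 8000; // milliseconds between polls

var pollingChannelSet:ChannelSet = new ChannelSet();
pollingChannelSet.addChannel(pollingChannel);
```

At an 8 second interval, 150 connected clients produce roughly 150 / 8 ≈ 19 poll requests per second even when there is nothing to deliver, which gives a feel for the overhead of this option.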

Long polling with piggybacking

- Similar to traditional poll, but if no data available, server “parks” poll request until data becomes available or configured server wait interval elapses.

- Client can be configured to issue next poll immediately following a poll response making this channel configuration feel very “real-time”.

Pros

- HTTP request/response pattern over standard ports. No firewall/proxy/network issue.

Cons

- Overhead of a poll roundtrip for every message when many messages are being pushed

- Servlet API uses blocking IO. You must define an upper bound for the number of long poll requests parked on the server

Example:

<channel-definition id="my-amf">

<endpoint url="http://{server.name}:{server.port}/{context.root}/messagebroker/amfpolling"/>

<properties>

<polling-enabled>true</polling-enabled>

<polling-interval-millis>8000</polling-interval-millis>

<piggybacking-enabled>true</piggybacking-enabled>

<wait-interval-millis>60000</wait-interval-millis>

<client-wait-interval-millis>1</client-wait-interval-millis>

<max-waiting-poll-requests>200</max-waiting-poll-requests>

</properties>

</channel-definition>

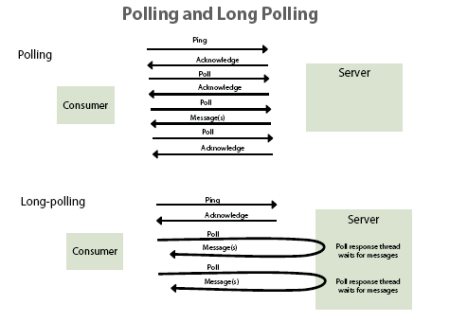

The following illustration is taken from the Adobe LiveCycle Data Services Developer’s Guide and is helpful in showing the difference between for standard polling versus long polling. The description of it, taken from the dev guide is as follows: “In the standard polling scenario, the client constantly polls the server for new messages even when no messages are yet available. In contrast, in the long polling scenario the poll response thread waits for messages to be available and then returns the messages to the client.”

Streaming AMF/HTTP Streaming

- Because HTTP connections are not duplex, this channel sends a request to “open” an HTTP connection between the server and client, over which the server will write an infinite response of pushed messages.

- Uses separate connection from browser’s connection pool for each send it issues to server.

- Each message is pushed as an HTTP response chunk (HTTP 1.1 Transfer-Encoding: chunked).

Pros

- No polling overhead associated with pushing messages to the client.

- Uses standard HTTP ports. No firewall issue and all requests/responses are HTTP so packet inspecting proxies won’t drop the packets.

Cons

- Holding “open” request on the server and writing an infinite response is not “nice” HTTP behavior. HTTP proxies that buffer responses before forwarding them can effectively swallow the stream. Assign the channel’s ‘connect-timeout-seconds’ property a value of 2 or 3 to detect this and trigger fallback to the next channel in your ChannelSet.

- No support for HTTP 1.0 clients.

- Servlet API uses blocking IO. You must define an upper bound for the number of streaming connections you allow.

Example:

<channel-definition id="my-streaming-amf">

<endpoint url="http://localhost:8400/messagebroker/streamingamf"/>

</channel-definition>
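For channels created at runtime, the short connect timeout and fallback mentioned in the cons above might look something like the sketch below. It assumes the client-side connectTimeout property (in seconds) as the counterpart of ‘connect-timeout-seconds’, and the polling endpoint URL is a placeholder of mine modeled on the streaming URL above.

```actionscript
import mx.messaging.ChannelSet;
import mx.messaging.channels.AMFChannel;
import mx.messaging.channels.StreamingAMFChannel;

// Streaming channel first, with a short connect timeout so a buffering
// proxy that swallows the stream is detected quickly.
var streamingChannel:StreamingAMFChannel = new StreamingAMFChannel("my-streaming-amf",
    "http://localhost:8400/messagebroker/streamingamf");
streamingChannel.connectTimeout = 3; // seconds

// Polling channel as the fallback (placeholder URL, assumption).
var fallbackChannel:AMFChannel = new AMFChannel("my-polling-amf",
    "http://localhost:8400/messagebroker/amfpolling");
fallbackChannel.pollingEnabled = true;

// Channels are tried in the order they are added.
var streamingChannelSet:ChannelSet = new ChannelSet();
streamingChannelSet.addChannel(streamingChannel);
streamingChannelSet.addChannel(fallbackChannel);
```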

RTMP

- Single duplex socket connection to the server.

- If direct connect fails, the Player will attempt a CONNECT tunnel through an HTTP proxy if one is defined by the browser.

Pros

- Single, stateful duplex socket that gives clean, immediate notification when a client is closed. The HTTP-based channels/endpoints generally don’t receive notification of a client going away until the HTTP session on the server times out.

- The Player’s internal fallback to an HTTP CONNECT tunnel, used to traverse an HTTP proxy if one is configured in the browser, gives the same benefit as above (this technique is not available from ActionScript or JavaScript).

Cons

- Generally uses a non-standard port so it is often blocked by client firewalls.

- Network components that do stateful packet inspection may also drop RTMP packets, killing the connection.

Example:

<channel-definition id="my-rtmp">

<endpoint url="rtmp://{server.name}:2037"/>

<properties>

<idle-timeout-minutes>20</idle-timeout-minutes>

</properties>

</channel-definition>

NIO Channels

- NIO stands for Java New Input/Output. The NIO-based server is a Java socket server that is completely separate from the servlet-based server-side option.

- The same channels as previously described are available through an embedded NIO Server.

- This option offers much better scalability and no configured upper bound on the number of parked poll requests.

- Because the servlet pipeline is not being used, this endpoint requires more network configuration to route requests to it on a standard HTTP port if you need to concurrently service HTTP servlet requests.

- NIO endpoints use the Java NIO API to service a large number of client connections in a non-blocking, asynchronous fashion using a worker thread pool. This removes the servlet option’s limitation of tying up one thread per connection, because a single thread of an NIO server can service multiple I/O operations.

NIO AMF example

<channel-definition id="my-nio-amf">

<endpoint url="http://{server.name}:2080/nioamf"/>

<server ref="my-nio-server"/>

<properties>

<polling-enabled>false</polling-enabled>

</properties>

</channel-definition>

NOTE: BlazeDS only supports the following channel types:

- Polling

- Long polling

- Long polling with piggybacking

- HTTP Streaming

Configuring Channels

Channels can be specified on the client side either through the -services compiler option in your project setup or programmatically at runtime. If done at runtime, they are coded directly into the client MXML or ActionScript, so no -services compiler option is specified on the project. This also means that the specific endpoint URL does not have to be compiled into the client SWF. This approach is much more flexible: you could simply create an XML file containing the endpoint URLs for each environment and read it in with an HTTPService in your client at initialization. More detailed information regarding defining channels can be found here: http://livedocs.adobe.com/livecycle/8.2/programLC/programmer/lcds/help.html?content=lcoverview_4.html

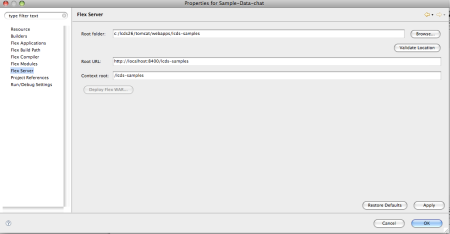

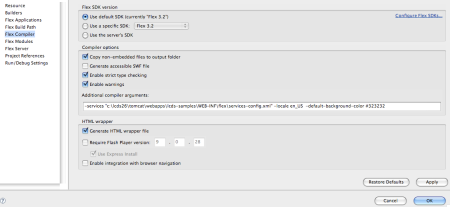

Below are screen shots to show you how to set up your LCDS project with the compiler options etc:

However, as noted in the previous paragraph, you can completely skip all the above project setup and define your channels for runtime use. An example of this is shown below:

<mx:ChannelSet id="channelSet">

<mx:RTMPChannel id="rtmpChannel" url="rtmp://tourdeflex.adobe.com:2037"/>

</mx:ChannelSet>

You can also code it in ActionScript if desired, such as the following:

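import mx.messaging.ChannelSet;

import mx.messaging.channels.AMFChannel;

import mx.messaging.channels.RTMPChannel;

var cs:ChannelSet = new ChannelSet();

cs.addChannel(new RTMPChannel("my-rtmp", "rtmp://localhost:2035"));

cs.addChannel(new AMFChannel("my-polling-amf","http://servername:8400/app/messagebroker/amfpolling"));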

You then use that defined ChannelSet on your DataService object as follows:

<mx:DataService id="ds" destination="product2" channelSet="{channelSet}"/>

This can be done for any of the LiveCycle Data Services objects you are working with, including RemoteObject, Producer/Consumer objects for Messaging etc…

An example of this with remoting is below:

<mx:ChannelSet id="channelSet">

<mx:AMFChannel id="amfChannel" url="http://tourdeflex.adobe.com:8080/lcds-samples/messagebroker/amf" />

</mx:ChannelSet>

<mx:RemoteObject id="myService" destination="userService" channelSet="{channelSet}" result="onResult(event)" fault="onFault(event)"/>

Many other examples of this can be found in the Flex Data Access section of Tour de Flex.
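The idea mentioned earlier of keeping endpoint URLs in an XML file and reading them in with an HTTPService at initialization could be sketched roughly like this. Treat it as an illustration under assumptions rather than the one right way to do it: the file name channels.xml, its layout and the function names are all hypothetical, and the code is meant to live in an <mx:Script> block of your application.

```actionscript
import mx.messaging.ChannelSet;
import mx.messaging.channels.AMFChannel;
import mx.rpc.events.FaultEvent;
import mx.rpc.events.ResultEvent;
import mx.rpc.http.HTTPService;

// Hypothetical channels.xml deployed next to the SWF, for example:
// <channels>
//   <channel id="my-amf" url="http://servername:8400/app/messagebroker/amf"/>
// </channels>
private var runtimeChannelSet:ChannelSet = new ChannelSet();

// Call this once at startup, e.g. from the application's creationComplete handler.
private function loadChannelConfig():void {
    var configService:HTTPService = new HTTPService();
    configService.url = "channels.xml";  // assumed file name
    configService.resultFormat = "e4x";  // deliver the result as an XML object
    configService.addEventListener(ResultEvent.RESULT, onConfigLoaded);
    configService.addEventListener(FaultEvent.FAULT, onConfigFault);
    configService.send();
}

private function onConfigLoaded(event:ResultEvent):void {
    var config:XML = event.result as XML;
    // Add one AMFChannel per <channel> entry, in file order.
    for each (var node:XML in config.channel) {
        runtimeChannelSet.addChannel(new AMFChannel(String(node.@id), String(node.@url)));
    }
    // Now hand the ChannelSet to your service, e.g. myService.channelSet = runtimeChannelSet;
}

private function onConfigFault(event:FaultEvent):void {
    trace("Could not load channel configuration: " + event.fault.faultString);
}
```

With this in place, moving between development, test and production only means deploying a different channels.xml, with no recompile of the SWF.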

General Guidelines

So when to use which channel? Below are some general guidelines. For more detailed information, see the LiveCycle Data Services Developer’s Guide:

- Use AMF channels for Remoting

- The order of preference in choosing channels in general, particularly based on latency (other than when using Remoting):

  - RTMP

  - Streaming AMF (1st choice for BlazeDS since RTMP is not supported there)

  - Long Polling

  - Polling

Important Note: you can code multiple channels at runtime and LCDS will use them in order of declaration. If there is a problem, such as a firewall issue with RTMP, then the next channel in the code will be tried. It is a good practice to add a regular polling channel as the last channel in order since it will always work as a fallback. An example of how this would look in the code is shown below:

<mx:ChannelSet id="channelSet">

<!-- RTMP channel -->

<mx:RTMPChannel id="rtmp" url="rtmp://tourdeflex.adobe.com:2037"/>

<!-- Long Polling Channel -->

<mx:AMFChannel url="http://tourdeflex.adobe.com:8080/lcds-samples/messagebroker/amflongpolling"/>

<!-- Regular polling channel -->

<mx:AMFChannel url="http://tourdeflex.adobe.com:8080/lcds-samples/messagebroker/amfpolling"/>

</mx:ChannelSet>
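If you want to see which channel actually ends up being used, one option is to listen for channel events on the ChannelSet. The sketch below assumes the ChannelSet with id channelSet from the MXML above and an <mx:Script> block to hold the code, and the function names are my own. It should log each failed attempt as well as the channel that finally connects.

```actionscript
import mx.messaging.events.ChannelEvent;
import mx.messaging.events.ChannelFaultEvent;

// Call once, e.g. from creationComplete, before the first message is sent.
private function watchChannelSet():void {
    channelSet.addEventListener(ChannelEvent.CONNECT, onChannelConnect);
    channelSet.addEventListener(ChannelFaultEvent.FAULT, onChannelFault);
}

private function onChannelConnect(event:ChannelEvent):void {
    // event.channel is the channel that succeeded in connecting.
    trace("Connected over: " + event.channel.uri);
}

private function onChannelFault(event:ChannelFaultEvent):void {
    // Reported when a channel fails, e.g. RTMP blocked by a firewall.
    trace("Channel failed: " + event.channel.uri + " (" + event.faultCode + ")");
}
```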

And lastly… if you’re like me and really need to understand what is happening under the covers with all of this rather than assuming the magical black box, I recommend reading at least this one page of the Adobe LiveDocs for LCDS. It was eye-opening for me!

July 15, 2009 at 8:41 pm

[…] COMET server technology. You definitely want NIO enabled for scalability. Here’s a recent blog with provide more information on channels and […]

July 22, 2009 at 6:56 pm

[…] The channels that are used for the actual transport can vary dramatically depending on the needs. Here is a great blog that explains the different transports. No matter what transport / channel is used the API in Flex […]

August 3, 2009 at 8:56 am

[…] Good observation by Michael Slinn – do not use HTTP compression when using HTTP Streaming channels – you will not be able to receive any messages from the server. If you want to understand better how HTTP Streaming works read this article. […]

November 24, 2009 at 6:31 pm

Hello, I have a question about data services: if I have two instances of the same ds, why does a change in one ds affect the other instance as well? Example:

dsOne

dsTwo

If I make a change in a collection associated with dsOne, it affects commitRequired in dsTwo.

best regards

January 8, 2010 at 1:41 am

it is simply superb to someone like me to whom endpoints and channels are little agnostic

March 29, 2010 at 10:37 pm

[…] If you need a more thorough round-up of endpoints, I highly recommend DevGirl’s excellent endpoint explanation. […]

September 9, 2010 at 4:18 pm

[…] a persistent connection. You can find more information about endpoints in Holly Schinsky’s blog post and in the […]

January 13, 2011 at 10:13 am

Thanks DevGirl,

The level of detail and clarity of your blog is very useful. Keep on posting.

One question on the channels, could you please elaborate on what port settings define. I understand the server port setting (of the url) but what about that 2nd one ? in this example it has a value of 8400.

Regards,

coderunner

January 13, 2011 at 10:14 am

OOps , i meant the 2nd port has a value of 8700 in the example.. what does that refer to ? the outgoing port on the server ???

thanks

coderunner

January 13, 2011 at 12:50 pm

Hi coderunner, first of all thanks!! I’m always hoping people find useful tidbits on this blog :).

Also, thanks for asking about this. I believe that port is actually redundant and misleading in that sample and I need to update that. The port 8400 specified on the URL should be all that is needed. Thanks! Holly